Archive for the ‘Technology’ Category

January 29th, 2024 by Ammu Nair

The balancing art of project management, finding equilibrium between following the process and the imperative of getting it done, is paramount. Project management often gets a bad reputation across industries because of the popular view that project managers can be rigid and overly structured, adding to the project cost …

Posted in IT project management, Technology

December 15th, 2023 by Jimmy Bui

What is the Laravel framework? Laravel is an open-source PHP framework that offers a wide range of features for web application development. PHP is a programming language used by a very large portion of websites across the globe, including all WordPress websites. Laravel provides an elegant syntax and a set of tools for common programming …

Posted in Custom Technology, Online Technologies, Technology

January 9th, 2023 by Heather Maloney

A pragmatic and robust approach to building a strategic marketing plan for 2023, from a digital marketing leader who helps dozens of clients every year with their marketing planning and execution. Hopefully you have already started thinking about how you will spend your marketing budget, time and resources in 2023. If you haven’t nailed …

Posted in Digital Marketing, Online Technologies, Pay Per Click, Social Media, Technology

January 3rd, 2023 by Pavithra Kameswaran

After a website or web application is built and launched, you may be tempted to think that all is done and that it will keep running forever in the same manner it did on the first day. This is not the case at all. When we buy a house, we set it up the …

Posted in Technology

October 25th, 2022 by

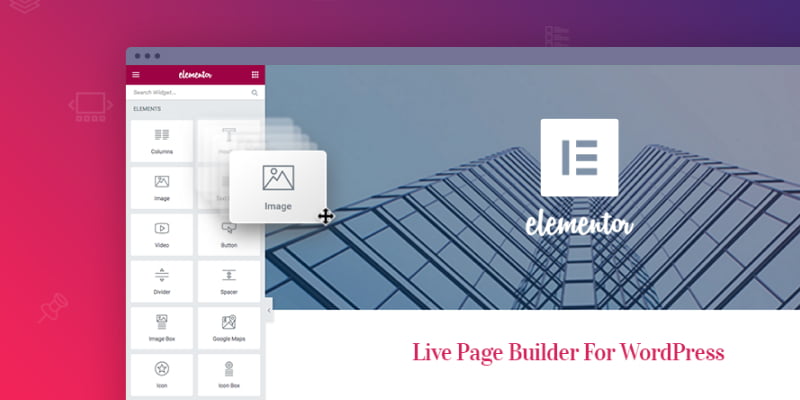

Pavithra Kameswaran What is Elementor? Elementor is a page builder solution that can be installed within WordPress, to provide efficiencies with regard to the build of a WordPress Website. The efficiencies are mainly with regard to implementing mobile responsiveness, and quickly implementing modern looking site elements. Elementor continually provides new user interface elements which are relatively quick … Read More

Posted in Custom Technology, Technology, Website Creation

October 4th, 2022 by Ammu Nair

Over the past few months, we have been busy upgrading our client websites to PHP version 8, the most recent major release. PHP is one of the most widely used programming languages on the internet, powering nearly 80% of all websites, including those built on popular content management systems such as WordPress, Drupal and Magento. For the most …

Posted in Technology, Online Technologies

August 15th, 2022 by Heather Maloney

It’s official: theft and fraud by redirecting payments to the scammer’s bank account increased by 77% year on year in 2021. Invoice scams cost Australian businesses $227 million. This article by the ACCC describes the problem in more detail. In response to increased invoicing fraud, a global framework for e-procurement (including e-invoicing), called PEPPOL, has been developed. …

Posted in Online Technologies, Technology

July 19th, 2022 by

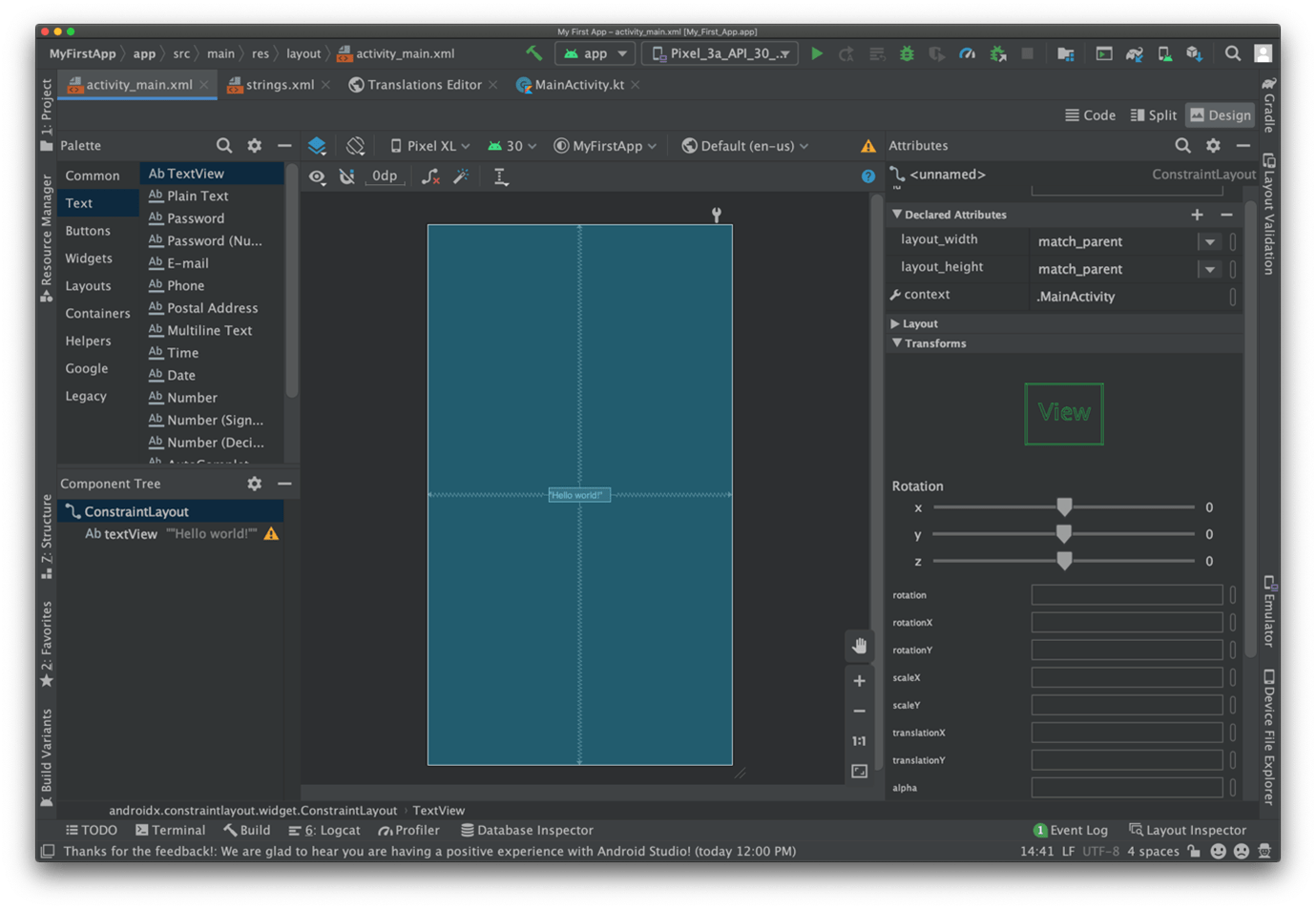

Heather Maloney You know what you want to create, and you know the people that are crying out for your app idea. Before you start down the path of building the next killer app, first let’s look at 3 tech concepts that you really need to understand. The Cloud. Your app idea may or may not need … Read More

Posted in Custom Technology, cloud computing, mobile apps, Technology

April 5th, 2022 by Ishan Geeganage

More than 6.64 billion people across the globe are using smartphones (and the apps on them). The majority of people use mobile applications for banking, searching for and ordering from restaurants, health, news, email and engaging on social media platforms. A mobile user interface is the graphical and usually touch-sensitive display on a mobile device, …

Posted in mobile apps, Custom Technology, Technology

March 14th, 2022 by Heather Maloney

Pinterest – what is it, and how does it work? Are you planning a major life event? Or seeking some inspiration to redo your home? If so, then you’re probably using Pinterest. Pinterest users (aka ‘pinners’) generally create their own boards dedicated to a theme, and then pin images to those boards. Mostly their …

Posted in Digital Marketing, Pay Per Click, Social Media, Technology